OpenAI Scholars Project

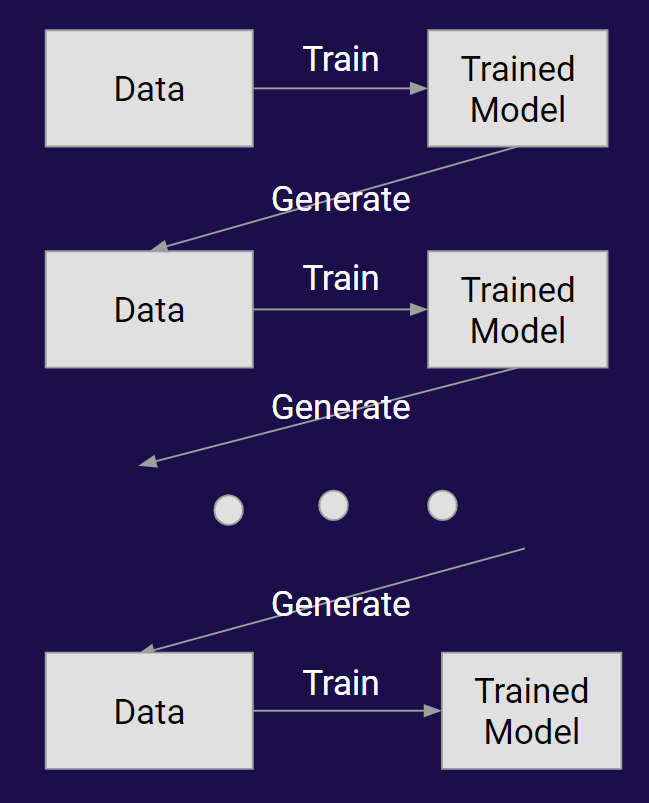

My blog post is split up into three parts:

- Literature Review (incomplete, since this is a very large field of study): Lenses of Analysis on Opinion Spread. This post is an older version that contains more detailed discussion and explains why I didn't include some things (like advertising), for those who are interested.
- Experiments and Analysis Section: Training models on their own outputs.
- Speculative Section: What we'll (probably) see before we build artificial cultures. These are just some research ideas and thoughts I had after doing lots of reading, so take them with a grain of salt.