A common research goal in AI is to build a virtual society that is as complex and rich and interesting as human society. Human culture seems to have this really cool open-ended nature that we haven't been able to replicate in silicon yet, and we'd like to do that.

However, I wanted to get a better picture of what it'll look like when we start getting closer to human culture.

Of course, we can't know the answer, and AI tends to break our expectations. Still, I'd like to form a better hypothesis* to try and guide my future research in this direction.

The most natural thing to do is to look at what happened for humans. This is where theories of the evolutionary history of culture come in.

There's a pretty big debate about whether some of the features that are useful in cognition arose through gene-culture co-evolution or through cultural selection alone.

In the California School (Boyd, Richerson, Henrich, etc.), the view is that it happened the way lactose tolerance did: some early dairying favored people who carried lactose-tolerance genes, which resulted in more lactose-tolerance genes after a few generations, which in turn supported more dairying. This process is known as gene-culture co-evolution.

In the other, newer (but also older) school of thought (Heyes, Birch, etc.), they believe that these mechanisms arose purely culturally, without any underlying genetic change.

For my purposes, whoever is right doesn't really matter, with one caveat: one approach to AI is reverse engineering the brain. If Heyes et al. are right, it might be more difficult for us to find a genetic component that underlies much of what it means to be human, if it turns out that much of what we think of as "innate human nature" is actually culturally learned. But by examining adult human brain structure, we may still be able to see the underlying (learned) mechanisms, so this doesn't matter too much. We should just be hesitant about trying to trace them all the way back to genes.

* I don't claim that any of this is original research; it's mostly distilling things I've read and discussions I've had with others.

Many of these "pieces of culture" can actually be explained by individual learning: either associative learning or some other cognitive mechanism. That's not wrong; they did originally arise that way. However, many of these techniques are very difficult to find via individual learning (see the "poverty of the stimulus" arguments), which is why this process took a while. Yet once the techniques are found, they can often be shared much more easily than they can be reinvented from scratch. Thus, we can say that the origin of the skill for the vast majority of people is "social learning" and that the skills are "culturally learned", even if the skill has a biological location in the brain where it usually shows up and was originally discovered via individual learning. By analogy, most people have Pokémon neurons in the same location in the brain, yet there clearly wasn't an evolutionary pressure that led to having a "Pokémon" region of the brain.

Also, it's very likely that the conditions needed to get past all of these phases are very fiddly. Plenty of other animals have simple forms of cultural selection, but humans are the only ones that have made it all the way to our modern form of culture. This suggests that other concerns often outweigh having proper culture, that things are often not complex enough to need culture, that diversity collapse often happens, etc. We should not expect there to be some simple formulation that gives us culture. My guess is that it's gonna be finicky the whole way through.

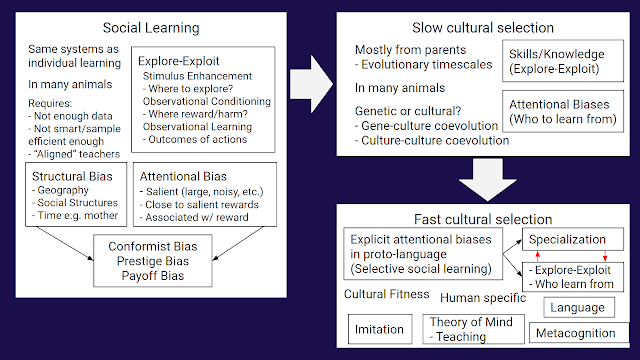

Skills and knowledge/attentional biases feedback loop

There are two pieces here: "skills and knowledge", and "who to learn from/attentional biases". Skills and knowledge are about where to explore, where you can get reward, and what the outcomes of actions are. Each of these is an aspect of an explore-exploit problem, which is informative about the initial purpose of culture: to distribute relevant knowledge about a general explore-exploit problem over many thinking minds.

Once there is enough expertise, it becomes important to filter the information and spend your time learning from the people that have the most expertise. Attentional biases are these selective choices of who to pay attention to and learn from.

These points suggest that this process is pretty sensitive, as the following things would prevent culture from emerging:

- If the agents are too smart (they don't need to rely on others' breakthroughs because they can make all the inferences themselves), or the environment is too easy. To prevent this, we need to set things up so that "breakthroughs" in learning happen less often than once per single-agent "lifespan" (whatever that means in your context), and agents have different enough experiences to have different kinds of breakthroughs.

- If the agents can memorize all of the relevant skills, they won't need to use language to talk to each other. We should especially expect this to be a problem if they are all using the same architecture, or if they have some fixed architecture (unless they can meta-learn learning algorithms or have few-shot learning abilities). Randomizing the environment might alternatively address this issue, but it seems like the parameters of what makes a good environment are sensitive enough that requiring a randomly generated good environment might be asking for too much.

- If the agents aren't cooperating with each other. This is only loosely necessary, as it's clearly not true IRL, but the point is that competition adds another axis to learn on that can inhibit useful information flow.

- If the environment isn't interesting enough, and the supply of new things to learn eventually runs out. This is a huge research direction in itself, with all kinds of ways in which it's very sensitive. From the lens of cultural evolution, that shouldn't be too surprising. Tons of animals exhibit social learning and slow cultural selection, but humans are the only ones (so far) to be in a setting where things are tuned carefully enough that we can get fast cultural selection/"culture" to emerge.

- If you don't have a distillation process: in particular, teaching is a good way to keep the information grounded and accessible. Essentially, if you have a few individuals, they can develop their own culture and language, but it'll be very difficult for an outsider to learn, which inhibits information exchange. By requiring new generations to continually learn the information, it selects for information that is accessible and learnable, and continually distills it into a more accessible form that allows for building off of it. See the section on Language below for a concrete discussion of how this iterative process has led to the form of language we see today: it's very shaped by constraints.

- If you get horizontal transfer too early. Horizontal transfer (transfer among individuals, instead of just from parents to children) adds an additional competitive component, where ways of looking at the world need to compete for collective attention. This can mean that less helpful pieces of information that spread better end up winning out over more helpful pieces of information. If information is only being passed from a parent to a child (and slowly refined via this teaching/learning process plus individual experience), the fitness of the information is reflected in the fitness of the individual, and we see useful information spreading at an evolutionary timescale. In a sense, it is more difficult to "align" horizontal transfer with fitness. It should be noted that fitness still applies at the group level, but for ML it's probably more expensive to simulate entire groups and the competition between them. Also, via mutation in attentional biases, we should eventually expect systems to arise that protect against these unhelpful viral ideologies once individuals realize they're not helpful. However, that can take time, meaning the overall process takes longer, and this added instability could cause problems.

Nature seems to have done these things, so in theory we can too. Here's what you should expect if you get them all right:

1. Agents transmit skills and knowledge

- Initially this starts out as Stimulus Enhancement, Observational Conditioning, and Observational Learning

- Once the skills and knowledge get more complex, these develop into more nuanced specifics, but are still pretty messy and not using language

- Eventually language develops to encode the skills and knowledge

Because we are much closer to human-level language than we are to human-level motor skills, depending on the environment it's possible that we'd see language emerge earlier.

2. Agents transmit attentional biases

- Initially this starts out as Conformist Bias, Prestige Bias, and Payoff Bias

- Those are very loose, and eventually more nuanced biases develop, but these are still pretty messy and not using language

- Eventually language develops to encode attentional biases, things like "learn X from Y"

As more skills and knowledge are produced, the attentional biases get more and more important. Attentional biases and skills and knowledge produce a positive feedback loop that encourages specialization and better knowledge transfer.
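To make the three starting biases a bit more concrete, here is a minimal sketch of how each one picks whom to copy. Everything in it (the `Model` record, using a `followers` count as a stand-in for prestige, the toy population) is an assumption made up for illustration, not a claim about how these biases work in people or in any existing system.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    """A hypothetical agent that others could learn from."""
    name: str
    variant: str    # the behavior this agent currently displays
    payoff: float   # reward the agent is observed to get from it
    followers: int  # how many others already attend to this agent


def conformist_choice(models):
    """Conformist bias: copy the most common variant in the population."""
    counts = Counter(m.variant for m in models)
    return counts.most_common(1)[0][0]


def prestige_choice(models):
    """Prestige bias: copy whoever already has the most learners."""
    return max(models, key=lambda m: m.followers).variant


def payoff_choice(models):
    """Payoff bias: copy whoever is observed to get the highest payoff."""
    return max(models, key=lambda m: m.payoff).variant


population = [
    Model("a", "dig", 1.0, 0),
    Model("b", "dig", 1.2, 1),
    Model("c", "dig", 0.9, 0),
    Model("d", "fish", 3.0, 2),
    Model("e", "trap", 2.0, 5),
]

print(conformist_choice(population))  # dig
print(prestige_choice(population))    # trap
print(payoff_choice(population))      # fish
```

The three rules disagree on this population, which is the point: which bias an agent leans on changes which skills spread.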

I'm aware that this is fairly vague, and would like to do more reading into the intermediate pieces here, and will probably update this section in the future. I imagine that much of this will just need to be understood better by doing experimentation, using what happened in humans as a loose guide.

Details/Research Directions

That's the intuitive picture from a high level standpoint, using human culture as a reference. But what does this tell us about what we need to do? It suggests the following research directions:

- Teaching Knowledge and Skills: Get RL agents to teach other agents how to do tasks. This is sorta done via meta-learning, but getting this to happen via some kind of language would be significant progress. In addition, "stimulus enhancement" (where to explore) suggests that it would be useful if agents could use language to "hand off" their learning progress and where they think is promising to explore to other agents. These agents would then do some learning, and "hand off" their progress to a new agent, who repeats.

- Distributed work/scheduling algorithms: What happens if the agents have all the knowledge and skills? Are they done? No. Once agents have all the skills for the world, in many settings they'll need to distribute the work. Some agents do X, some agents do Y, etc. This may also include breaking down complex tasks into their component parts. Thus, there's a pretty natural research direction that involves starting with agents that are good at all the relevant tasks in a very simple world, then having them learn ways of distributing the work efficiently. This could happen via an economy, or it might happen by simpler means.

With scheduling, it's also worth thinking about the mechanisms of communication. Do they just have a single channel they can send to everyone? Or do they send to specific neighbors nearby? Some choices here will probably make the problem easier, but it's unclear to me which ones would do that.

Those two research directions can happen in parallel. They are extremely difficult open RL research problems, so it would probably be best to start with very simple grid-world or Markov-type settings.
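As a toy illustration of the "hand off" idea from the teaching direction above, here is a sketch in which each agent has a short "lifespan" (a step budget) and the goal is further away than any single lifespan can reach. The one-dimensional search, the `explore` and `run_generations` helpers, and the frontier-as-message are all hypothetical simplifications, with no learning involved.

```python
def explore(start, budget, goal):
    """Scan cells start, start+1, ... for `budget` steps.

    Returns (found, frontier), where frontier is the next unexplored cell.
    """
    for cell in range(start, start + budget):
        if cell == goal:
            return True, cell
    return False, start + budget


def run_generations(n_agents, budget, goal, hand_off):
    """Return the index of the agent that finds the goal, or None."""
    frontier = 0
    for agent in range(n_agents):
        # With hand-off, the new agent resumes where the last one stopped.
        # Without it, every agent starts over from cell 0.
        start = frontier if hand_off else 0
        found, new_frontier = explore(start, budget, goal)
        if found:
            return agent
        frontier = new_frontier  # the "message" passed to the next agent
    return None


print(run_generations(n_agents=10, budget=10, goal=37, hand_off=True))   # 3
print(run_generations(n_agents=10, budget=10, goal=37, hand_off=False))  # None
```

With hand-off, the fourth agent (index 3) reaches the goal. Without it, no agent ever does, because the "breakthrough" is further away than one lifespan.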

- Distributed learning algorithms: Imagine we are in a setting where:

- For each task, we have at least one agent that knows how to do and teach that task

- We've solved the teaching knowledge and skills problem

Now we have a scheduling problem again, except in addition to scheduling tasks, we need to schedule the teaching of tasks. Once enough agents know how to do the tasks, we can start having agents actually do the tasks instead of teaching. This is a simpler problem because the agents don't need to invent teaching or learn to do the tasks from scratch. Learning the tasks or doing teaching can involve a large amount of training done ahead of time, or we could just abstract it away and give the agents actions of "teach task X" and "do task X". Agents would only be allowed to do and teach actions that they have been taught. This abstraction allows us to work on this distributed learning problem without having to actually solve the teaching or learning problems.

This problem is still a scheduling problem, so in some ways it's just the previous problem. Still I think it's different enough that it's worth mentioning. It might also be worth exploring some abstractions where agents can improve their abilities at certain tasks, and/or explore and look for new tasks.
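Here is a minimal sketch of the "teach task X" / "do task X" abstraction with a single task. The `simulate` function and its `teach_until` threshold policy are assumptions invented for this example. A real setup would have many tasks and a learned scheduling policy rather than a fixed threshold.

```python
def simulate(n_agents, steps, teach_until):
    """One task; agent 0 starts as the only agent who knows it.

    Each step, every agent that already knows the task either teaches one
    novice (while fewer than `teach_until` agents know it) or does the task.
    Returns the total units of task work completed.
    """
    knows = [True] + [False] * (n_agents - 1)
    work_done = 0
    for _ in range(steps):
        # Only agents who knew the task at the start of the step may act.
        teachers = [i for i, k in enumerate(knows) if k]
        novices = [i for i, k in enumerate(knows) if not k]
        for _teacher in teachers:
            if novices and sum(knows) < teach_until:
                knows[novices.pop()] = True  # action: "teach task X"
            else:
                work_done += 1               # action: "do task X"
    return work_done


for horizon in (3, 10):
    for threshold in (1, 8):
        print(horizon, threshold, simulate(8, horizon, threshold))
```

With a long horizon (10 steps), teaching everyone first gives 56 units of work versus 10 for never teaching. With a short horizon (3 steps), teaching everyone uses up all the time and gives 0 versus 3. Even this stripped-down version has a real scheduling tradeoff.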

When talking about these problems, if you aren't careful you can get into the debate about the contributions of individual and social learning. In particular, there are the following things:

- System 2 social learning

- System 1 social learning

- Individual learning leading to decisions about how to act socially

And there's still debate about how hardcoded social learning is (genes vs. learned/culturally inherited).

For example, one has to be careful to not say "all distribution of work is decided by selective attention, which is a purely social mechanism" because that's not true. There is a social contribution: learned attentional biases that make decisions easier. But there's also an individual contribution: intentional attentional biases that an individual chooses as the result of deliberation about what they think makes sense. And maybe the learned attentional biases stem from individual learning of the social norms. It's all very messy and sort of unimportant from an ML perspective exactly how much each system contributes. However, if we completely abstract away individual learning and individual agent motivations, our systems may be weaker as a result, and might seem fairly different from human culture. A good setup uses a combination of both individual learning and social learning. For things like theory of mind, we even find that the extent to which it is deliberate vs. automatic varies among cultures, so there may not be a "most humany amount" of each ingredient.

Anyway, once we feel like we have a decent handle on the above three things, we will be on our way towards making culture. We'd have:

- Agents that slowly learn to do things in the world: the explore-exploit tradeoff of finding new tasks vs. getting better at existing tasks

- Agents that are capable of teaching other agents the things they learn

- Agents that are capable of effectively scheduling the teaching and doing of tasks

This should lead to a nice positive feedback loop of specialization and effective distribution of work, as the cultural emergence literature predicts. The distributed scheduling algorithms are responsible for the "attentional biases", and the learning and teaching are responsible for the development and transfer of knowledge and skills.

A note on open-endedness and scarcity of attention

(more speculative section)

But it still seems like something is missing here. Where's the open-endedness? We've assumed that agents always have more tasks they can do, which seems like we've assumed away the problem.

I think that while it's important to have a "complex enough" environment with enough tasks to do, we won't need that much task complexity before we start seeing lots of cultural complexity. I believe this for two reasons.

First, note that optimal scheduling is NP-hard in general. Thus, agents will be getting better and better approximations to doing this well, but they won't ever be perfect, so there is some room for open-ended improvement. This is especially true if there is some uncertainty in the duration or outcomes of certain tasks, or if part of scheduling involves learning.
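To see the gap between a cheap heuristic and the optimum on one concrete scheduling problem, here is a small sketch. It assumes the simplest version I could pick for illustration: independent tasks with known durations, identical agents, and the goal of minimizing the time at which the last agent finishes (the makespan). The greedy rule is the standard "longest processing time first" heuristic, and the brute-force search is only feasible because the instance is tiny.

```python
import heapq
from itertools import product


def lpt_makespan(durations, n_agents):
    """Greedy: give the longest remaining task to the least-loaded agent."""
    loads = [0] * n_agents
    heapq.heapify(loads)
    for d in sorted(durations, reverse=True):
        lightest = heapq.heappop(loads)
        heapq.heappush(loads, lightest + d)
    return max(loads)


def optimal_makespan(durations, n_agents):
    """Brute force over every assignment (exponential in the task count)."""
    best = sum(durations)
    for assignment in product(range(n_agents), repeat=len(durations)):
        loads = [0] * n_agents
        for d, agent in zip(durations, assignment):
            loads[agent] += d
        best = min(best, max(loads))
    return best


tasks = [3, 3, 2, 2, 2]
print(lpt_makespan(tasks, 2))      # 7
print(optimal_makespan(tasks, 2))  # 6
```

The greedy schedule finishes at time 7, while splitting the tasks as {3, 3} and {2, 2, 2} finishes at 6. The heuristic is fast but leaves room for improvement, which is the kind of slack the argument above relies on.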

Second, note that in all of the above settings, we've assumed that all agents are working towards a common goal. For humans, this is not really the case.

Fortunately, I don't actually think we need to give agents different conflicting goals. Instead, I'd argue that some part of culture involves conflict over what is the most effective "thought work" spend. This doesn't really make sense if all agents are in agreement about what the next task should be. However, if the distributed scheduling algorithm allows for some disagreement/uncertainty about promising directions or needs for resources, suddenly individual agents have an incentive to add some "viral" bits to their information. This is true even if they are all ultimately "aligned" at achieving the same end goal.

To be more concrete, imagine we have one group of agents that think the population should work on X problem the most, and one group of agents that think the population should work on Y problem the most. Agents will have some learned communication mechanisms that help them decide who should work on what tasks. In the case of a conflict, at least one of these groups won't have the outcome they think is correct, so they'll try and look for ways of signaling that they are right.

With purely rational agents, they would ideally debate with the other side and reach a consensus. However, if the communication protocol is not robust, it may be vulnerable to certain attacks that are much more effective at convincing others than rational debate. We could see the development of rhetoric and politics as adversarial attacks on communication channels. Eventually agents would develop immunities to these, and then new attacks would need to be invented. This zero-sum-like game of competing for attention could provide a nice source of open-endedness in culture.

(By the way, we might expect things like this to be more likely in the case where symbols are an imperfect representation of the world the agents live in. Though rational debate/theorem proving might be more computationally difficult for agents than just finding weaknesses in the channel, so this isn't strictly necessary.)

Note that these arguments rely on not only developing communication protocols for running the scheduling algorithm, but also on having agents that are continually developing and learning new communication protocols. Whether through meta-learning or just continuing to optimize them, this is a harder problem than simply having learned communication protocols. However, if we only have a learned - but now fixed - communication protocol, we may miss out on this dynamic.

Still, I think that "debate" and "scheduling is difficult" may only get us so far. If we want properly open-ended culture, we need some kind of co-creation of task niches. This starts getting into all of the insights people have learned from trying to reproduce evolution; see "Self-Organizing Intelligent Matter: A blueprint for an AI generating algorithm" for a good introduction to much of the literature around these issues. The "third pillar" section in "AI-GAs: AI-generating algorithms, an alternate paradigm for producing general artificial intelligence" also contains some relevant discussion. Part of the point here is that while having "competing opinions on what needs to be done" seems like a good way to have open-ended development, it's analogous to "competing species". Thus, it's susceptible to the same kinds of problems of diversity collapse and a need for no objective that exist in evolution.

I think it's unfortunate that after all this thinking, I've fallen back to saying "ah, what tasks agents work on is the same problem of 'how to get open-ended autocatalytic sets (co-created niches)'" that exists in evolution research. This is essentially just the insight that "creativity is the same thing as evolution", which isn't really novel. Still, I've learned a lot, and this is more evidence for how important that problem really is for intelligence.

After the specialization/skills and knowledge/attentional biases feedback loop

Once we've managed to get that kind of positive feedback loop happening, we should see progressively more complex things being discussed. If our environment is open-ended enough, these should eventually develop into culture.

From here it's more speculative, but I'll briefly touch on a few things that we should expect to see.

Cognitive Gadgets is a bit outdated, but does cover these in much more detail.

Imitation: See "Imitation" for a detailed discussion on this one

Metacognition and normative cognition: Aside from Cognitive Gadgets, this still seems underexplored

Language: I'll spend a bit more time on this one, in particular detailing how constraints seem to lead to our form of human language. It seems very plausible to me that different kinds of constraints could lead to different kinds of languages, so we should keep in mind what constraints we are imposing if we want the languages our agents form to be roughly human-like. There are other ways people have to keep language grounded (such as alternating between pre-training on human language and whatever task you are giving them), many of which are more practical than "consider the constraints" since we haven't managed to evolve language from scratch yet.

Language

"Language Acquisition Meets Language Evolution" proposes that language itself develops through evolution over time. "Language is shaped by our minds". We see this in the case of simple pidgin languages that develop into complex creole languages, and we also see this in cases where a language has emerged from scratch and develops complexity over time, like with Nicaraguan Sign Language. The idea is that languages initially form as these simple things, then the process of using language and teaching it to successive generations refines the language itself. Properties of languages that make them more teachable, or that help people communicate better, are more likely to be passed on. They list four attributes that contribute to this refinement process, but I'm going to break them apart a little more and give further thoughts on them.

Constraints on the signal transmission mechanism:

Communication Bandwidth: There are only (roughly) four channels: pitch, resonance, sound type, and volume. As resonance and other similar sound characteristics vary based on biology, they wouldn't be very helpful to use for language, but relative pitch changes, sound type, and volume are all used for communicating various things, and used more or less in different languages. Note that hand gestures and facial expressions give additional ways of communicating, and the usage of speech itself doesn't seem to be innate, as sign languages seem to form and function just as well as speech.

Noisy signal: Different people say things slightly differently, the environment can be noisy, and some sounds sound more similar than others. This reduces the amount of information that can be transmitted in a period of time, and leads to some redundancy in speaking.

These constraints lead to:

- Limitations on how much information can be transmitted in a period of time

- Communication being mostly one thing at a time

- Only a subset of the possible ways of communicating information are actually used in a language. For example, English doesn't use pitch to vary words, whereas many other languages do.

Though honestly, I don't think these constraints are super relevant because processing constraints in the next section also have the same effect and are much stronger.
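The link between a noisy signal and redundancy can be shown with a small calculation. The sketch below assumes a deliberately crude model that is not from the paper: each binary symbol is flipped independently with probability `p_flip`, the speaker repeats every symbol an odd number of times, and the listener takes a majority vote. The `majority_error` helper is made up for this example.

```python
from math import comb


def majority_error(p_flip, repeats):
    """Probability that a majority vote over `repeats` noisy copies is wrong.

    Assumes `repeats` is odd and each copy is flipped independently.
    """
    need = repeats // 2 + 1  # number of flipped copies that outvotes the rest
    return sum(
        comb(repeats, k) * p_flip**k * (1 - p_flip) ** (repeats - k)
        for k in range(need, repeats + 1)
    )


for repeats in (1, 3, 5):
    rate = 1 / repeats  # useful symbols per transmitted symbol
    print(repeats, round(rate, 2), round(majority_error(0.2, repeats), 4))
```

With a 20% flip chance, the error per symbol drops from about 0.20 with no repetition to about 0.10 with three copies and about 0.06 with five, while the information rate falls to a third and a fifth. Real languages use far subtler redundancy than repetition, but the tradeoff has the same shape.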

Constraints on processing the signals:

Associative memory is trying its best to form associations between groups of sounds and work with executive function to draw conclusions, but it isn't perfect. Humans seem to be best at inferring grammatical rules when there is some perceptual difference between the classes.

There are a few major limitations:

Memory: We can't perfectly remember what we hear, so we have to only remember specific things on the fly, and predict what things are important to remember.

Sequential processing: Processing a sentence takes thinking effort, so we have to strategically allocate our mental efforts to understanding what is most important in a sentence. This also means that in speaking, it's important to say things in a way that can be reasonably understood by others, which often requires some context and knowledge of what they already understand.

Inference Restrictions: For example, humans seem to be much better at grouping two words in the same grammatical category when there is some audible feature that can be used for classification. In language, this often leads to different kinds of audible properties for different grammatical categories.

Thought: Our mental representations of concepts influence how we talk about them. For example, compositionality is a way of dealing with a practically infinite number of thoughts. The way we think about time influences tense, and more generally the way we think about concepts influences how we talk about them. However, most of these "thought mechanisms" predate language, and from the theory above originate in knowledge/skills and attentional biases. This one is a little too general, I'd like to break it down more at some point.

These constraints lead to:

- Language needs to be somewhat redundant and transmit info slower than can theoretically be transmitted, because processing speed is the bottleneck (which leads to all of the kinds of limitations that constraints on the signal transmission mechanism have)

- Language needs to have a reasonable structure that can be expected, so we don't need to do processing about that (grammar, syntax, morphology, parts of speech, etc.). Some aspects of this structure such as parts of speech are innate and related to how we think about things, and so are shared between languages. Other aspects of this structure are arbitrary (simply having a predictable pattern is enough, what the specific pattern is doesn't really matter, many are good) which leads to natural language variations. Many "universal" linguistic principles like pronoun binding rules can be explained from this practical perspective: for example, the pronoun binding rules help the brain figure out what pronouns are referring to. But pronoun binding rules can also be influenced by our mental concepts: in "After a wild tee-shot, Ernie found himself in a deep bunker", himself refers to his golf ball.

Finally, I spent some time reading infant language learning experiments, because that gives us a good idea about some of what the underlying mechanisms are. My impression is that our understanding is still pretty limited, and we need more robust experiments. I also spent some time looking into linguistics literature; see my post from last year that covers some of my thinking there.

Acknowledgements: Thanks to Scott Neville, Grayson Moore, and Jeff Wu for helpful discussions
