What we'll (probably) see before we build artificial cultures

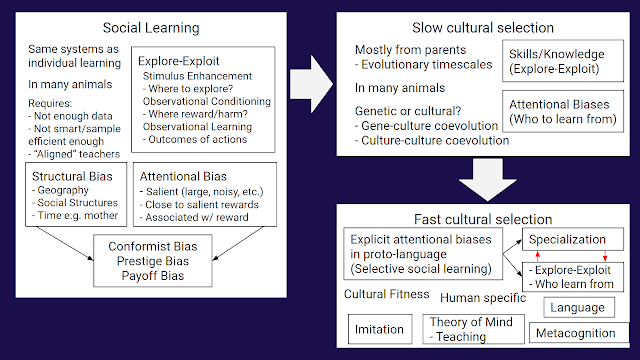

Introduction

A common research goal in AI is to build a virtual society that is as complex, rich, and interesting as human society. Human culture seems to have this really cool open-ended nature that we haven't been able to replicate in silicon yet, and we'd like to do that. So far, the research has mostly focused on four things:

- Grounded language
- Variants of cooperative and competitive games
- Making agents learn in more complex environments
- Teaching agents to learn learning algorithms (meta-learning)

However, I wanted to get a better picture of what it'll look like when we start getting closer to human culture. Of course, we can't know the answer, and AI tends to break our expectations. Still, I'd like to form a better hypothesis* to help guide my future research in this direction.

The most natural thing to do is to look at what happened for humans. This is where theories of the evolutionary history of culture come in. There's a pretty big debate...